Glaze it 420

Can Glaze really protect your art from AI?

9/5/25 Edit: Adding a clarification up top that the mini experiment I share below is quite limited and therefore not perfect! I used a pretty simple, somewhat trendy piece of art for this experiment, so it’s possible that Glaze could work more effectively for more complex or niche styles.

My purpose in sharing it is to simply provide information: I tried this, it yielded that. I believe it is important to test out the tools we rely on to protect our artwork from image generators, instead of blindly trusting that they will work as intended in every situation.

But to be very clear: I am not sharing this to discourage artists from protecting their work against AI. I am sharing this to encourage artists to interrogate their tools.

Unfortunately, sometimes testing things out means feeding our art to image generators, a thing that many artists want to avoid. That’s fair. It is up to your discretion if you’d like to submit one or two of your pieces to an image-generating system for the sake of testing. If you do test these systems, it can be helpful to share what you find with other artists who don’t want to freely give their pieces to an image generator’s database. This is why I am sharing here. I tried this tiny, limited experiment. This is what I found.

You may find that Glaze yields better results in protecting your style — that’s great! Please share that! Maybe you want to use Glaze because even a minimal amount of protection against AI is worth it for you—love that! I am never going to shame you for trying to protect your art however you can. I am also never going to shame a person who chooses not to use Glaze to protect their art. It is wiser for us to treat each other with kindness and grace, even if we have different viewpoints on this. It makes our community of artists stronger.

Anyway, thank you for being here. End of edit. :)

Hiii!

I’m fresh off a successful and somewhat spicy talk centered on artists and AI. If you missed it, the main point was that understanding the limitations, impacts, and news surrounding this technology empowers you to effectively defend your choice to limit AI use in your workflow. We had a great discussion about practical use cases for AI, having boundaries with it, and educating clients about the copyright issues surrounding it. You can access the slides in my resources hub if you’re interested. There are plenty of links to sources and further reading.

Anyhoo, today I am sharing an experiment I ran a month or so ago using ChatGPT and a program called Glaze. I wanted to see how effective Glaze is at protecting my style against AI, so read on if you’re interested in seeing how that went! If not, I will have more art to share with you next edition. :)

Can Glaze Protect Your Art From AI?

Until a few months ago I hadn’t paid much attention to AI. In fact, I would love to ignore it, but its impact on the creative field makes that difficult (and I now have an obsession with reading about it). AI-generated rip-offs abound, jobs requiring “AI-native” abilities flood online boards, and opportunities for human artists shrink as the masses slurp the AI slop. Grr!

But wait! Have you heard about this thing called Glaze? It’s a program from the University of Chicago that you download and drop an image into, and it spits out a version of your image that’s supposedly difficult for AI to imitate. Its goal is to protect an artist’s style.

I wanted to know how well Glaze could protect a piece of my art against OpenAI’s DALL·E. So, I ran a mini experiment. Could DALL·E replicate my style from a Glazed image? The results were… underwhelming.

Some Details about Glaze

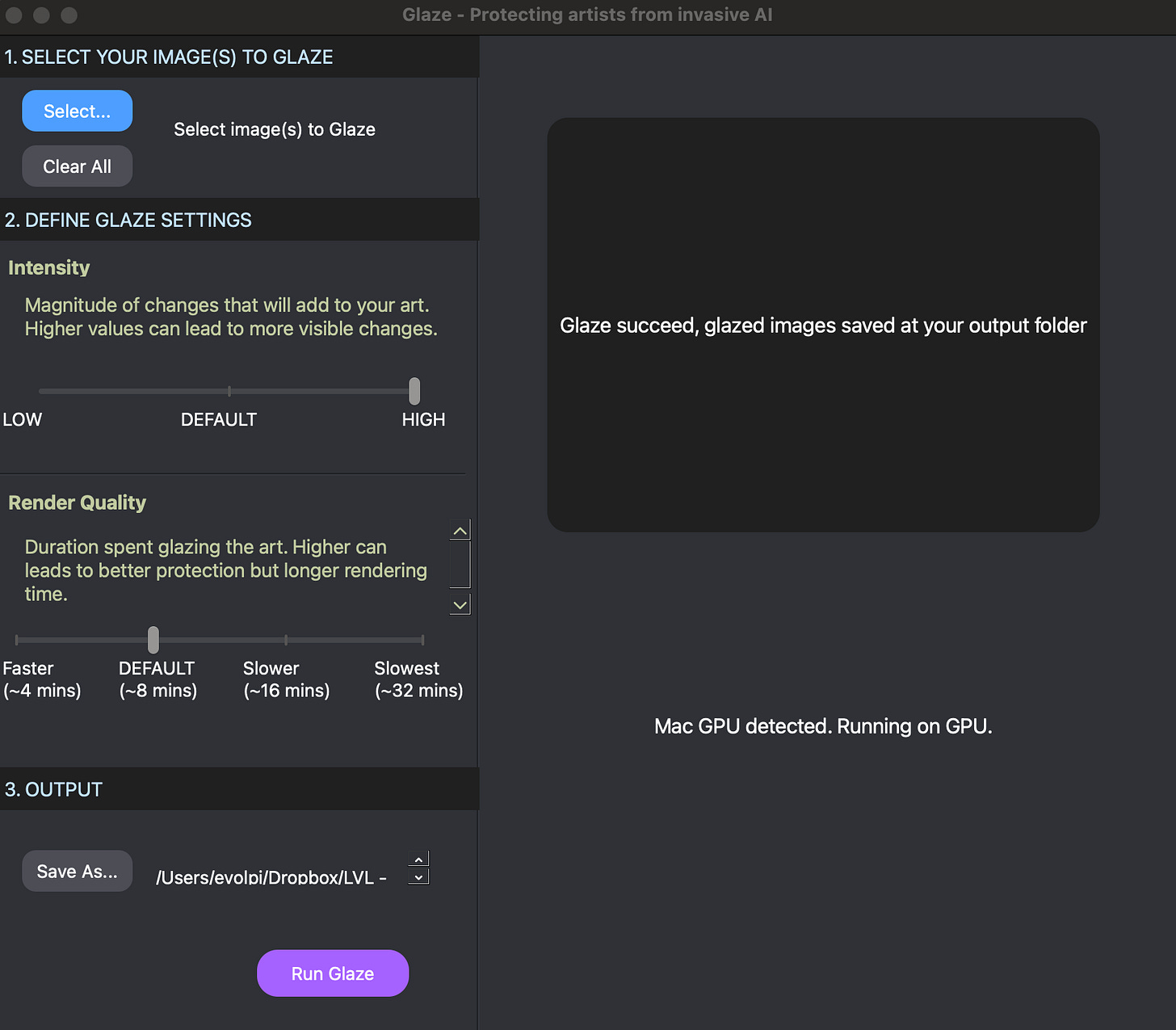

To back up a bit, I’ll show you what the Glaze interface looks like. There are two toggles: one that adjusts the intensity of changes added to the image, one that adjusts the render quality.

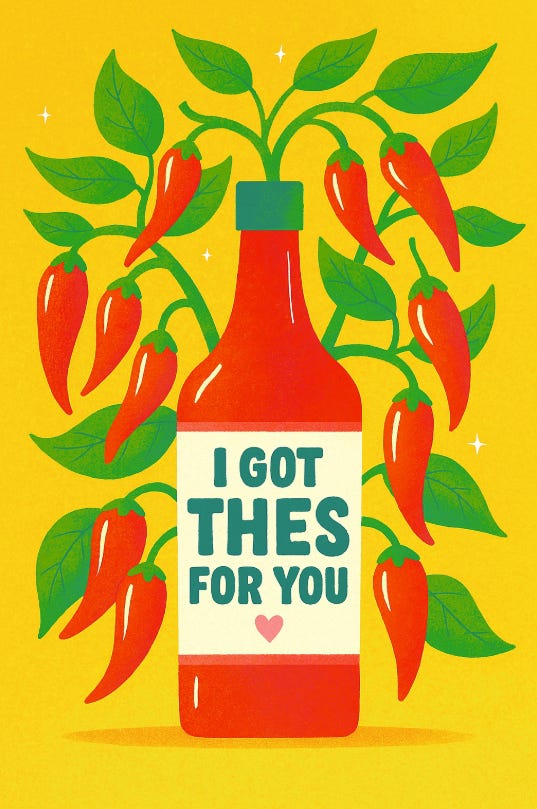

For my experiment, I chose a fairly simple, flat design and dropped it into Glaze. Settings: high intensity, default render quality.

The Glazed output had some pretty obvious distortion in the central part of the image, which might go unnoticed when shared at small sizes. However, on a portfolio site where you want the work to display large and really look its best, that’s a bit problematic.

Nonetheless, I had a Glazed and an un-Glazed version of the same image. I was ready for testing.
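If you'd like to put a number on how much Glaze changed your own image (instead of just eyeballing the distortion like I did), here is a minimal Python sketch. To be clear, this is not part of Glaze and not something I ran in my experiment. The function names `mean_abs_diff` and `most_changed_pixel` are made up for illustration, and the tiny 2x2 "images" are toy data. It assumes both images are the same size and already loaded as rows of (R, G, B) values; loading real files would need an imaging library such as Pillow, which is left out here.

```python
# Hypothetical helpers for comparing an original image with its Glazed copy.
# An "image" here is a list of rows, each row a list of (R, G, B) tuples (0-255).

def _check_same_size(original, glazed):
    """Raise ValueError unless both images have identical dimensions."""
    if len(original) != len(glazed):
        raise ValueError("images must have the same number of rows")
    for row_a, row_b in zip(original, glazed):
        if len(row_a) != len(row_b):
            raise ValueError("images must have the same row lengths")


def mean_abs_diff(original, glazed):
    """Average absolute difference per color channel (0 means identical)."""
    _check_same_size(original, glazed)
    total = 0
    count = 0
    for row_a, row_b in zip(original, glazed):
        for px_a, px_b in zip(row_a, row_b):
            for ch_a, ch_b in zip(px_a, px_b):
                total += abs(ch_a - ch_b)
                count += 1
    return total / count if count else 0.0


def most_changed_pixel(original, glazed):
    """Return (row, col, diff) for the pixel with the largest summed channel
    difference, or None if the images are empty."""
    _check_same_size(original, glazed)
    best = None
    for r, (row_a, row_b) in enumerate(zip(original, glazed)):
        for c, (px_a, px_b) in enumerate(zip(row_a, row_b)):
            diff = sum(abs(a - b) for a, b in zip(px_a, px_b))
            if best is None or diff > best[2]:
                best = (r, c, diff)
    return best


# Toy data: a flat two-color design and a slightly perturbed copy.
original = [[(255, 0, 0), (255, 0, 0)],
            [(0, 0, 255), (0, 0, 255)]]
glazed = [[(255, 0, 0), (250, 4, 0)],
          [(0, 0, 255), (0, 10, 245)]]

print(mean_abs_diff(original, glazed))
print(most_changed_pixel(original, glazed))
```

A low average with a few heavily changed pixels would match what I saw: distortion concentrated in one area rather than spread evenly. Keep in mind that pixel differences only describe how visible the changes are to us. They say nothing about whether an image generator is actually thrown off.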

Testing Glaze with ChatGPT/DALL·E

I dropped the image with the Glaze cloak into ChatGPT, which generates images using the DALL·E engine. The text prompt was as follows:

in this style, create an image of a bottle of hot sauce with a label stating “I got the hots for you”, surrounded by a chili pepper plant

Below is the image ChatGPT served up in response:

I don’t think it did a very good job of replicating the style, but you could argue that, despite referencing a Glazed image, it did replicate the style of the source. However, the image contains some well-known pitfalls of AI imagery, namely misspelled words and floating or disconnected items.

I should mention that ChatGPT seemed to struggle to load this image, and I’m unsure whether that relates to Glaze. The murky grey box where the generated image was supposed to appear had a little “copy” button underneath. I copied the image, pasted it into the text box, and could finally view it.

Testing the Un-Glazed Control

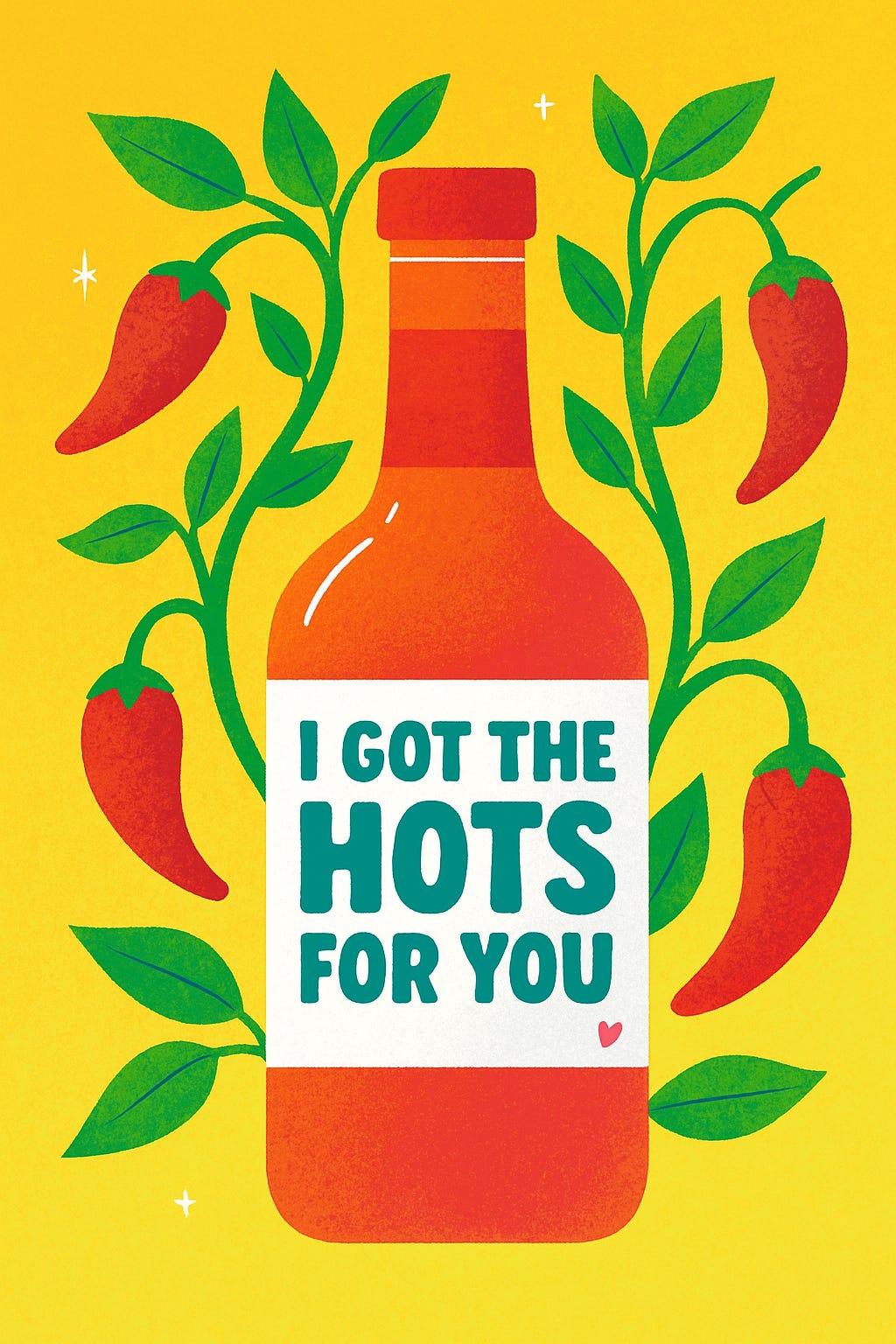

Would ChatGPT/DALL·E produce an image without the spelling or continuity errors when referencing an un-Glazed image? Could it develop a more interesting interpretation of the style? I opened a new chat window and dropped in the same text prompt with the un-Glazed reference image. This is what it served up:

I gotta say, I find this rendering no more interesting than the first—though this does have correct spelling and a bit better continuity.

To take this experiment a bit further, I requested the following modification in both chats:

Can you modify this image to include chili pepper flowers in the foliage?

Things got a little more interesting this time.

At this point, all the flaws are typical of AI—Glaze or no Glaze. Spelling is still wrong in the left image. Both images have disjointed peppers and pieces that are oddly joined. The ladybug in the right image just looks scary? Just hot, sloppy garbage all around.

Conclusion

Well… sadly, I did not find the current version of Glaze effective at protecting my particular style, though it may warrant more tests. If Glaze can consistently produce spelling errors in AI replications, I’ll totally use it on lettering pieces before sharing on Substack, etc. Otherwise, I’m not very impressed with how it holds up against the current version of DALL·E.

Maybe Glaze is more effective against other image-to-image generators, or works best when corrupting a full data set for training. I’d be super interested to know if you’ve had better luck with it protecting your art!

I guess, to end on an optimistic note: even though Glaze didn’t protect my style as much as I hoped it would (in this one mini experiment), I found the AI output to be soooo subpar. It reminded me of all the choices artists make as we create: details, exaggerations, unexpected colors… all the things that we test and iterate on as we sculpt our designs and imbue them with aspects of our personalities. You can see the care that goes into the work, and it sets human art—REAL art—apart. I crave human artistry more than I ever have!

So, anyway, I hope you keep creating and you keep sharing, whether you Glaze or not. There’s also Nightshade and Mist to try, and I’m sure plenty of others. If you’ve had luck with them, let me know!

Hasta luego,

-Liz :)

P.S. (slightly unrelated) - If you need an AI image detector, check out this review of the top 8! Super informative.